What Is Linear Algebra?

Discover how linear algebra simplifies complex data into elegant solutions. Explore matrix operations, eigenvalues, and advanced applications in engineering and data science.

Linear algebra is a branch of mathematics that studies vectors, matrices, linear mappings, and the solution of linear systems. Imagine planning an outing where transportation, meals, and accommodation must all be coordinated: this means balancing several variables at once, and linear algebra helps us describe and solve such problems as systems of equations. A typical system has the form Ax = b, where the matrix A encodes the relationships among the factors, the vector x holds the unknown quantities, and the vector b holds the specific numerical requirements.

In this article, we cover the essential definitions, main branches, numerical algorithms, and cross-disciplinary applications of linear algebra. Through real-life examples and intuitive formulae, our aim is to help readers grasp its core ideas and strengthen their practical problem-solving skills. Whether you are a student, an engineer, or a data scientist, linear algebra is a must-have tool.

Linear Algebra Fundamentals and Definitions

Definitions and Basic Concepts of Linear Algebra

Vectors, Matrices, and Linear Functions

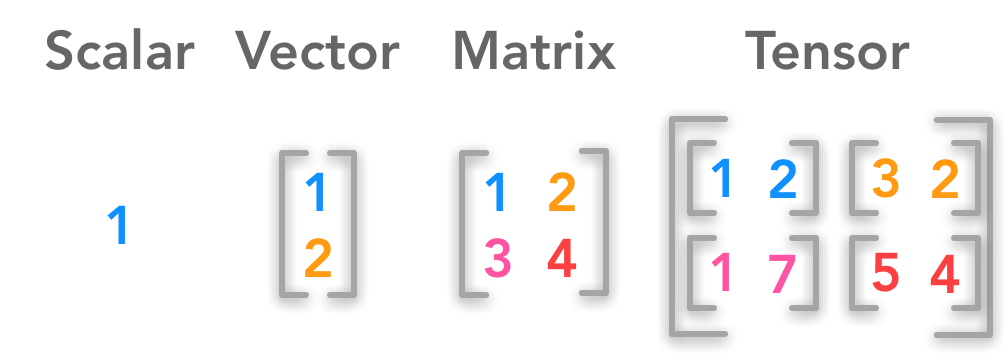

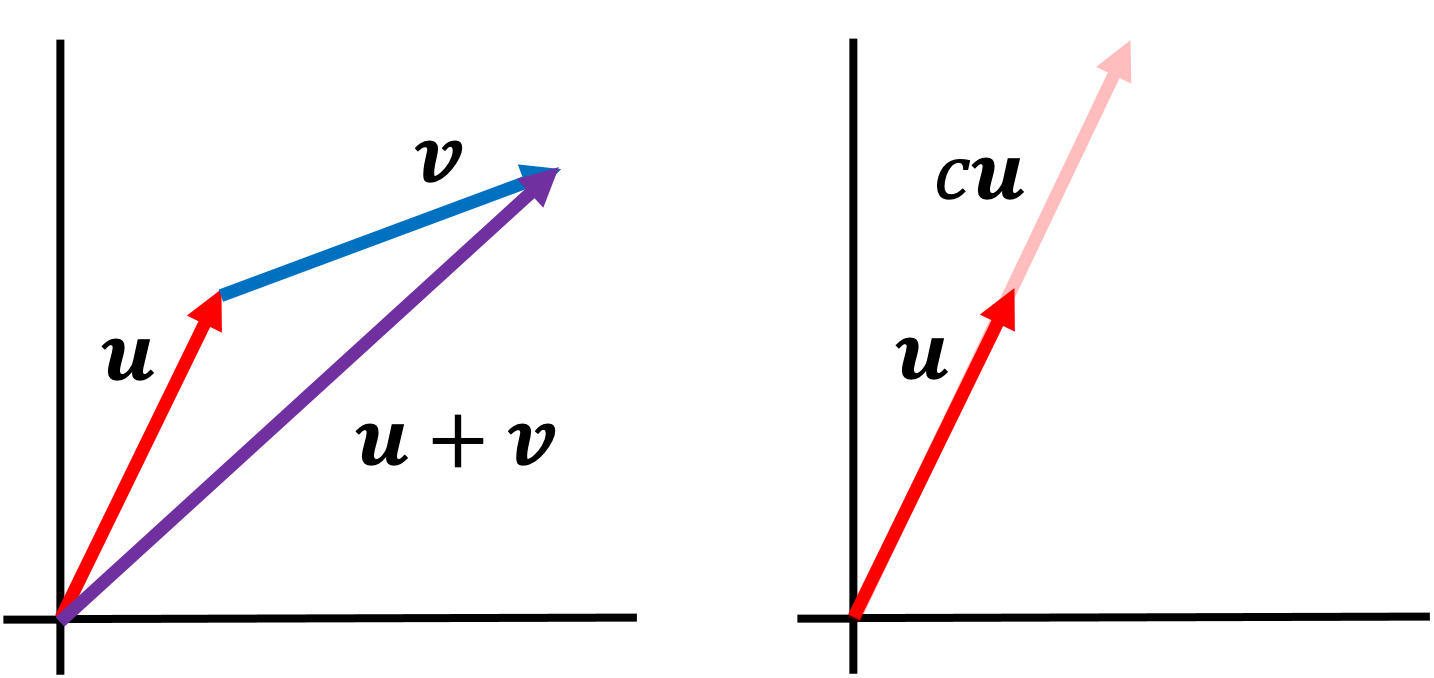

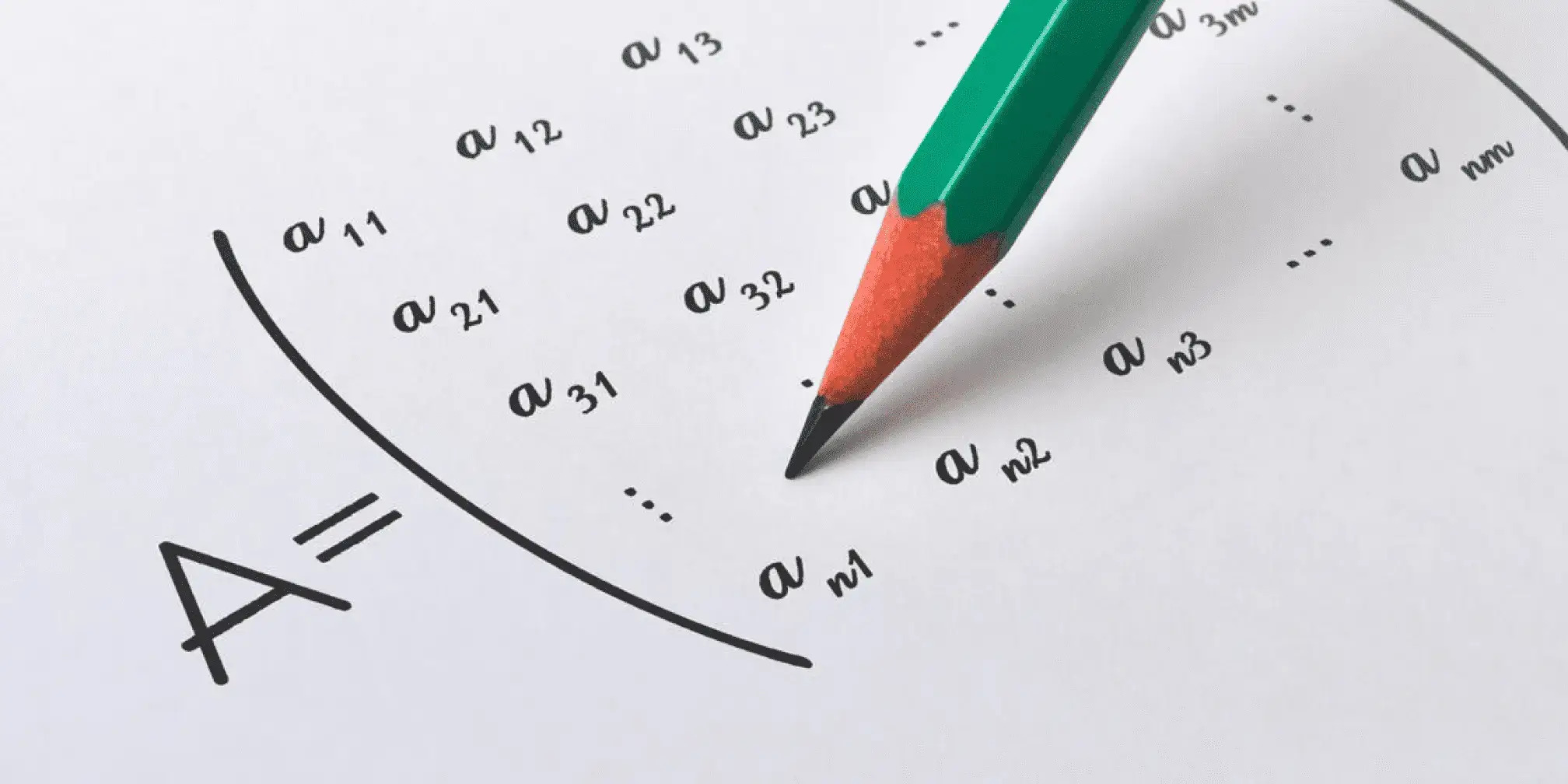

At the core of linear algebra lies the concept of a vector, which can represent a directed line segment in space and, more generally, any quantity with magnitude and direction. A vector is commonly written \(\vec{v}\), and vectors belong to a set (a vector space) that is closed under vector addition and scalar multiplication. A matrix is a rectangular array of numbers arranged in rows and columns; it can represent data as well as transformations. An \(m \times n\) matrix has the form

\(A=\begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{pmatrix}\)

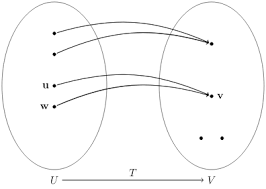

Building on matrices and vectors, a linear function (or linear transformation) is a mapping \(f: V \rightarrow W\) that satisfies, for all vectors \(\vec{v}_1, \vec{v}_2\) in \(V\) and all scalars \(c_1, c_2\):

\(f(c_1 \vec{v}_1+c_2 \vec{v}_2)=c_1 f(\vec{v}_1)+c_2 f(\vec{v}_2).\)

Linear algebra gives engineers and scientists a tool for converting complex data relationships into manageable formulae, and it provides the theoretical support for solving difficult problems in engineering and science.
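The linearity condition can be checked numerically for a map given by a matrix. The following is a minimal sketch using NumPy; the matrix `A`, the vectors `v1` and `v2`, and the scalars `c1` and `c2` are arbitrary illustrative values, not taken from the article.

```python
import numpy as np

# Hypothetical 2x3 matrix: it maps vectors in R^3 to vectors in R^2.
A = np.array([[1.0, 2.0, 0.0],
              [0.0, -1.0, 3.0]])


def f(v):
    """Linear map defined by matrix-vector multiplication, f(v) = A v."""
    return A @ v


v1 = np.array([1.0, 0.0, 2.0])
v2 = np.array([-1.0, 4.0, 1.0])
c1, c2 = 2.0, -3.0

lhs = f(c1 * v1 + c2 * v2)       # f(c1 v1 + c2 v2)
rhs = c1 * f(v1) + c2 * f(v2)    # c1 f(v1) + c2 f(v2)

print(lhs, rhs)                  # both sides equal [-19.  15.]
print(np.allclose(lhs, rhs))     # True
```

Any map of the form \(f(\vec{v})=A\vec{v}\) passes this check, which is why matrices serve as the concrete representation of linear transformations.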

Linear Equations and Their Solutions

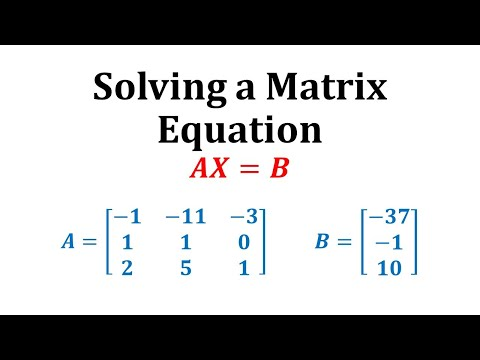

Matrix equations are among the most widely used models for linear systems. The standard form is

\(Ax=b,\)

where \(A\) is the coefficient matrix, \(x\) is the unknown vector, and \(b\) is the constant vector. Several methods exist for solving such systems, including Gaussian elimination, matrix inversion, and iterative methods. The core idea of Gaussian elimination is to use elementary row operations to transform the coefficient matrix into upper triangular form and then solve for the unknowns one at a time by back-substitution. If the matrix \(A\) is nonsingular, the solution can be expressed as

\(x=A^{-1}b.\)

This formula shows that the solution is unique whenever \(A\) is invertible. In practice, however, solvers rarely form \(A^{-1}\) explicitly, because elimination-based methods are cheaper and more accurate.
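As a small illustration, the sketch below solves a 2-by-2 system in two ways with NumPy: with `np.linalg.solve`, which uses an LU factorization internally, and with an explicit inverse. The numbers in `A` and `b` are made up for the example.

```python
import numpy as np

# Illustrative system: 2x + y = 3 and x + 3y = 5.
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
b = np.array([3.0, 5.0])

# A is nonsingular because its determinant (2*3 - 1*1 = 5) is nonzero.
x_solve = np.linalg.solve(A, b)   # elimination-based solver
x_inv = np.linalg.inv(A) @ b      # x = A^{-1} b, formed explicitly

print(x_solve)                    # approximately [0.8 1.4]
print(np.allclose(x_solve, x_inv))
```

Both routes agree here; for larger or ill-conditioned systems, `np.linalg.solve` is generally the safer choice.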

Vector Spaces

Properties of Vector Spaces and Subspaces

Vector spaces are a central concept in linear algebra. A vector space is a set of vectors together with the operations of vector addition and scalar multiplication, which satisfy eight fundamental axioms. Together with closure, these ensure that the sum of any two vectors in the space, and any scalar multiple of a vector in the space, still belongs to the space. An example is the set of all \(n\)-dimensional vectors over the real number field \(\mathbb{R}\). A subspace is a subset of a vector space that is itself closed under addition and scalar multiplication. Subspaces often simplify the analysis of a problem, and concepts such as basis and dimension give insight into their structure.

Linear Mappings and Linear Independence

Linear mappings are the bridge that links vector spaces together. A linear mapping \(T\) satisfies the linearity property:

\(T(c_1 \vec{v}_1+c_2 \vec{v}_2)=c_1 T(\vec{v}_1)+c_2 T(\vec{v}_2).\)

This linearity property allows us to represent mappings by matrices and to analyze how a transformation affects the structure of a space. Linear independence, on the other hand, is the essential criterion for deciding whether a set of vectors can form a basis. If a set of vectors \(\{\vec{v}_1,\vec{v}_2,\cdots,\vec{v}_k\}\) satisfies

\(c_1\vec{v}_1+c_2\vec{v}_2+\cdots+c_k\vec{v}_k=0 \Rightarrow c_1=c_2=\cdots=c_k=0,\)

then the set is said to be linearly independent. A linearly independent set that also spans the space forms a basis, and the number of vectors in a basis is the dimension of the space.
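One practical way to test the definition is to stack the vectors as columns of a matrix and compare its rank with the number of vectors. The sketch below takes this approach with NumPy; the helper name `is_linearly_independent` and the sample vectors are hypothetical, and the rank is computed in floating point, so nearly dependent vectors may be misclassified.

```python
import numpy as np


def is_linearly_independent(vectors):
    """Return True if the given vectors are linearly independent.

    The vectors are stacked as columns; they are independent exactly
    when the rank of that matrix equals the number of vectors.
    """
    M = np.column_stack(vectors)
    return bool(np.linalg.matrix_rank(M) == len(vectors))


independent = [np.array([1.0, 0.0, 0.0]),
               np.array([0.0, 1.0, 0.0]),
               np.array([1.0, 1.0, 1.0])]

# The second vector is twice the first, so this set is dependent.
dependent = [np.array([1.0, 2.0, 3.0]),
             np.array([2.0, 4.0, 6.0])]

print(is_linearly_independent(independent))  # True
print(is_linearly_independent(dependent))    # False
```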

Branches of Linear Algebra

Elementary Linear Algebra

Basic Matrix Operations and Solving Linear Systems

Elementary linear algebra emphasizes the basic operations on matrices and their application to solving systems of linear equations. The key operations are addition, scalar multiplication, matrix multiplication, and transposition. The product of an \(m \times n\) matrix \(A\) and an \(n \times p\) matrix \(B\) is defined entrywise by:

\((AB)_{ij}=\sum_{k=1}^{n} a_{ik}\, b_{kj},\)

where \(a_{ik}\) is the entry in the \(i\)th row and \(k\)th column of \(A\), and \(b_{kj}\) is the entry in the \(k\)th row and \(j\)th column of \(B\). Matrix multiplication corresponds to the composition of linear mappings, so it describes how successive transformations act on data.
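The entrywise formula translates directly into three nested loops. The sketch below is written for clarity rather than speed and compares its result with NumPy's built-in `@` operator; the function name `matmul_entrywise` and the sample matrices are illustrative.

```python
import numpy as np


def matmul_entrywise(A, B):
    """Compute AB from the definition (AB)_ij = sum_k a_ik * b_kj."""
    m, n = A.shape
    n_b, p = B.shape
    if n != n_b:
        raise ValueError("inner dimensions must match")
    C = np.zeros((m, p))
    for i in range(m):
        for j in range(p):
            # Row i of A paired with column j of B.
            C[i, j] = sum(A[i, k] * B[k, j] for k in range(n))
    return C


A = np.array([[1.0, 2.0],
              [3.0, 4.0]])
B = np.array([[0.0, 1.0],
              [1.0, 0.0]])   # swaps the two columns of A

C = matmul_entrywise(A, B)
print(C)                      # [[2. 1.] [4. 3.]]
print(np.allclose(C, A @ B))  # True
```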

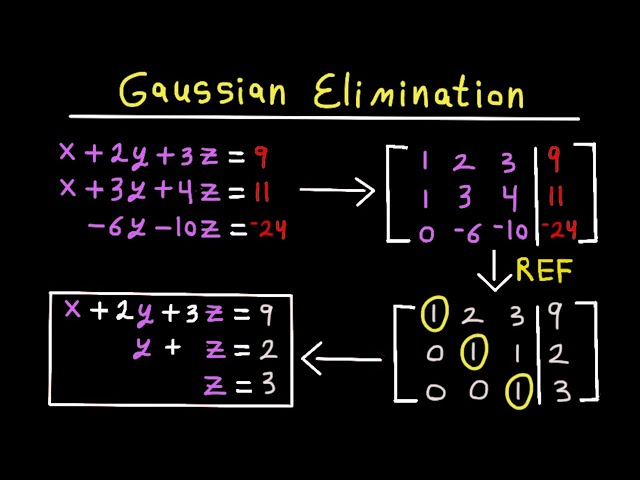

Gaussian elimination is a standard technique for solving systems of linear equations. It applies a sequence of elementary row operations to bring the augmented matrix into upper triangular (echelon) form, after which back-substitution recovers the unknowns. Consider a system of several linear equations written as:

\(Ax=b,\)

where \(A\) is the coefficient matrix and \(b\) is the constant vector. Elementary row operations transform \(A\) into an upper triangular matrix \(U\) and \(b\) into an intermediate vector \(c\); back-substitution on \(Ux=c\) then yields \(x\). When \(A\) is nonsingular, the unique solution can be written symbolically as:

\(x=A^{-1}b.\)

Although the inverse-matrix formula is compact, explicitly computing \(A^{-1}\) costs more arithmetic than elimination and tends to be less accurate in floating point. Gaussian elimination is therefore the usual practical choice, and it also deepens our understanding of matrix structure while providing a solid basis for engineering and scientific computation.

Advanced Linear Algebra

Eigenvalues, Eigenvectors, and Singular Value Decomposition

Advanced linear algebra delves deeper into matrix analyses, including eigenvalues, eigenvectors, and singular value decomposition (SVD). For an \(n \times n\) matrix \(A\), if there exists a nonzero vector \(x\) and a scalar \(\lambda\) such that

\(Ax=\lambda x,\)

then \(\lambda\) is called an eigenvalue of \(A\), and \(x\) is a corresponding eigenvector. To solve an eigenvalue problem, one typically forms the matrix

\(A-\lambda I,\)

and then solves the characteristic equation

\(\det(A-\lambda I)=0.\)

The roots of the characteristic equation are exactly the eigenvalues of the matrix. Eigenvalue analysis is closely connected with system stability and vibrational modes in engineering and scientific research.
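For a 2-by-2 matrix the characteristic equation is the quadratic \(\lambda^2-\operatorname{tr}(A)\lambda+\det(A)=0\), so its roots can be compared directly with a library eigenvalue routine. The following sketch does this with NumPy for an illustrative symmetric matrix; it is a numerical check under those assumptions, not a general-purpose eigenvalue algorithm.

```python
import numpy as np

# Illustrative symmetric matrix with eigenvalues 1 and 3.
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# Library routine: eigenvalues and eigenvectors (stored as columns).
eigenvalues, eigenvectors = np.linalg.eig(A)

# Characteristic polynomial of a 2x2 matrix:
# det(A - lambda*I) = lambda^2 - trace(A)*lambda + det(A).
char_roots = np.roots([1.0, -np.trace(A), np.linalg.det(A)])

print(np.sort(eigenvalues))       # approximately [1. 3.]
print(np.sort(char_roots.real))   # approximately [1. 3.]

# Each pair satisfies the defining equation A x = lambda x.
for lam, x in zip(eigenvalues, eigenvectors.T):
    print(np.allclose(A @ x, lam * x))
```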

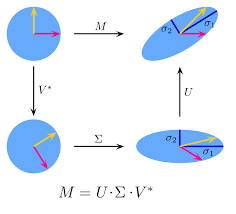

Singular Value Decomposition (SVD) is a matrix factorization that expresses any matrix as the product of three matrices. More precisely, for any real \(m \times n\) matrix \(A\), there exist an \(m \times m\) orthogonal matrix \(U\), an \(n \times n\) orthogonal matrix \(V\), and an \(m \times n\) rectangular diagonal matrix \(\Sigma\) with nonnegative diagonal entries (the singular values), such that

\(A=U\Sigma V^{T}.\)

Because every matrix has an SVD, the decomposition is universal in theory, and it is widely applied in signal processing, data compression, and engineering for dimensionality reduction.
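The sketch below computes an SVD with `np.linalg.svd`, rebuilds the matrix from its factors, and forms a rank-1 approximation by keeping only the largest singular value. It uses the reduced form (`full_matrices=False`), in which \(U\) and \(V^T\) are trimmed to the nonzero block of \(\Sigma\); the example matrix is arbitrary.

```python
import numpy as np

# Illustrative 2x3 matrix.
A = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

# Reduced SVD: U is 2x2, s holds the singular values, Vt is 2x3.
U, s, Vt = np.linalg.svd(A, full_matrices=False)

# Reconstruct A = U * diag(s) * V^T.
A_rebuilt = U @ np.diag(s) @ Vt
print(np.allclose(A, A_rebuilt))   # True

# Keep only the k largest singular values for a low-rank approximation.
k = 1
A_rank1 = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]

# The Frobenius-norm error equals the discarded singular value.
error = np.linalg.norm(A - A_rank1)
print(np.isclose(error, s[1]))     # True
```

Dropping small singular values in this way is the basic mechanism behind SVD-based compression and dimensionality reduction.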

By revealing the spectral structure of matrices, advanced linear algebra helps us interpret the essential characteristics of data. Decomposition techniques such as eigendecomposition and singular value decomposition offer powerful methods for problems in big data analytics, image processing, and machine learning.

Numerical and Applied Linear Algebra

Numerical Algorithms

Gaussian Elimination Method

Numerical algorithms, an integral component of modern computational mathematics, compute approximate numerical solutions to the linear algebra models encountered in practice. Gaussian elimination is one such algorithm: it applies elementary row operations to eliminate the entries below the main diagonal, transforming the system of linear equations into upper triangular form. For illustration, consider the linear system

\(Ax=b,\)

Through a sequence of elementary row operations, the matrix \(A\) is converted to an upper triangular matrix \(U\), yielding the equivalent system

\(Ux=c.\)

Back-substitution then starts from the last row and computes the unknowns one at a time. Gaussian elimination is highly effective for small and medium-sized problems, but rounding errors can accumulate, particularly without pivoting, and this can become a problem for large systems with many unknowns. More stable methods such as QR decomposition may be more suitable in those cases.
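The two phases described above, forward elimination and back-substitution, can be sketched as follows. This version adds partial pivoting (swapping in the row with the largest pivot candidate), which the text does not describe but which is a common safeguard against rounding problems; the function name `gaussian_elimination` and the test system are illustrative.

```python
import numpy as np


def gaussian_elimination(A, b):
    """Solve Ax = b by elimination with partial pivoting.

    Works on copies of A and b, reduces A to upper triangular form U
    (and b to c), then solves Ux = c by back-substitution.
    """
    A = np.array(A, dtype=float)
    b = np.array(b, dtype=float)
    n = len(b)

    # Forward elimination: zero out the entries below the diagonal.
    for k in range(n - 1):
        p = k + np.argmax(np.abs(A[k:, k]))   # row of the largest pivot
        if np.isclose(A[p, k], 0.0):
            raise ValueError("matrix is singular to working precision")
        if p != k:
            A[[k, p]] = A[[p, k]]
            b[[k, p]] = b[[p, k]]
        for i in range(k + 1, n):
            m = A[i, k] / A[k, k]
            A[i, k:] -= m * A[k, k:]
            b[i] -= m * b[k]

    if np.isclose(A[n - 1, n - 1], 0.0):
        raise ValueError("matrix is singular to working precision")

    # Back-substitution: solve from the last row upward.
    x = np.zeros(n)
    for i in range(n - 1, -1, -1):
        x[i] = (b[i] - A[i, i + 1:] @ x[i + 1:]) / A[i, i]
    return x


A = np.array([[2.0, 1.0, -1.0],
              [-3.0, -1.0, 2.0],
              [-2.0, 1.0, 2.0]])
b = np.array([8.0, -11.0, -3.0])

x = gaussian_elimination(A, b)
print(x)   # approximately [ 2.  3. -1.]
```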

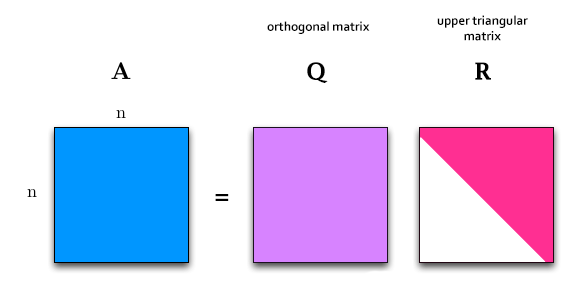

QR Decomposition and Iterative Methods

QR decomposition is a technique that decomposes a matrix into the product of an orthogonal matrix \(Q\) and an upper triangular matrix \(R\), i.e.,

\(A=QR,\)

where the orthogonal matrix \(Q\) satisfies

\(Q^TQ=I,\)

and \(R\) is an upper triangular matrix. QR decomposition can be used not only to solve linear systems but also to compute least squares solutions and eigenvalues. In a least squares problem, the goal is to find a vector \(x\) that minimizes the error norm

\(\|Ax-b\|_2,\)

and the QR decomposition transforms this problem into the triangular system

\(Rx=Q^Tb,\)

which can be solved quickly by back-substitution.
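A minimal sketch of this least squares procedure with NumPy follows. It fits a straight line to four made-up data points, using the reduced QR factorization returned by `np.linalg.qr` (for a tall matrix, \(Q\) then has orthonormal columns rather than being square). The helper `back_substitute` is hypothetical.

```python
import numpy as np


def back_substitute(R, y):
    """Solve R x = y for an upper triangular R, from the last row up."""
    n = len(y)
    x = np.zeros(n)
    for i in range(n - 1, -1, -1):
        x[i] = (y[i] - R[i, i + 1:] @ x[i + 1:]) / R[i, i]
    return x


# Overdetermined system: fit intercept and slope to four points.
A = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [1.0, 2.0],
              [1.0, 3.0]])
b = np.array([1.0, 2.9, 5.1, 7.0])

Q, R = np.linalg.qr(A)              # reduced QR: Q is 4x2, R is 2x2
x_qr = back_substitute(R, Q.T @ b)  # solve R x = Q^T b

# Cross-check against NumPy's least squares solver.
x_ref = np.linalg.lstsq(A, b, rcond=None)[0]
print(x_qr)
print(np.allclose(x_qr, x_ref))     # True
```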

In addition, iterative methods such as the Jacobi and Gauss-Seidel methods are well suited to large, sparse systems: they work only with the nonzero entries of the matrix, so memory consumption stays low, and the iteration can be stopped once the desired precision is reached. Convergence is not guaranteed for every matrix; strict diagonal dominance is one standard sufficient condition. These properties make such methods attractive in engineering applications.
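The Jacobi method can be sketched in a few lines: split \(A\) into its diagonal \(D\) and the remainder \(R\), then repeat \(x \leftarrow D^{-1}(b-Rx)\) until the update is small. The example below uses a small dense, strictly diagonally dominant matrix for readability; a real large-scale solver would use a sparse matrix format. The function name, tolerance, and iteration limit are illustrative choices.

```python
import numpy as np


def jacobi(A, b, tol=1e-10, max_iter=500):
    """Jacobi iteration for Ax = b, starting from the zero vector."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    D = np.diag(A)              # diagonal entries of A
    R = A - np.diagflat(D)      # off-diagonal part of A
    x = np.zeros_like(b)
    for _ in range(max_iter):
        x_new = (b - R @ x) / D
        # Stop when successive iterates agree to within the tolerance.
        if np.linalg.norm(x_new - x, ord=np.inf) < tol:
            return x_new
        x = x_new
    raise RuntimeError("Jacobi iteration did not converge")


# Strictly diagonally dominant, so the iteration converges.
A = np.array([[4.0, -1.0, 0.0],
              [-1.0, 4.0, -1.0],
              [0.0, -1.0, 4.0]])
b = np.array([15.0, 10.0, 10.0])

x_jacobi = jacobi(A, b)
print(np.allclose(x_jacobi, np.linalg.solve(A, b)))   # True
```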

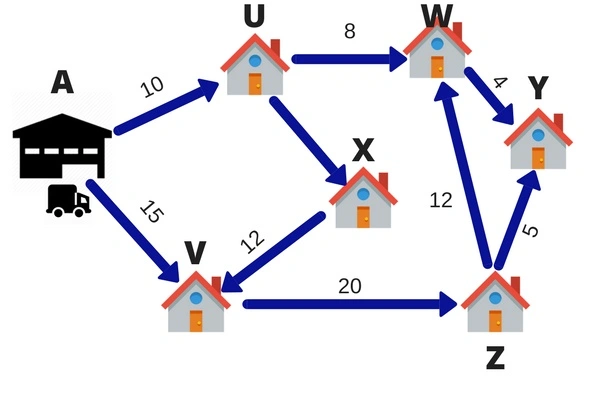

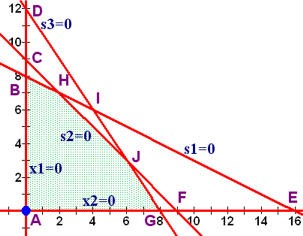

Linear Programming and Its Applications in Engineering and Economics

Linear Programming (LP) is a method for optimizing (maximizing or minimizing) a linear objective function subject to linear constraints. A basic linear programming problem typically takes the standard form:

\(\begin{aligned} \text{Objective:} & \quad \min_{x} \; c^T x, \\ \text{Constraints:} & \quad Ax \leq b,\\ & \quad x \geq 0, \end{aligned}\)

where \(c\) is the coefficient vector of the objective function, \(A\) is the constraint matrix, \(b\) is the constant vector, and \(x\) is the vector of decision variables. By abstracting a real-world problem into this form, decision-makers can find optimal solutions under limited resources.

In economics, linear programming has many practical uses, including optimizing investment portfolios, controlling costs, and building market equilibrium models. The classical simplex method moves iteratively from vertex to vertex of the feasible region, using basic solutions of the constraint system to build a simplex tableau, until the optimality conditions are satisfied. Modern solvers often also offer interior-point methods to meet the computational demands of larger problems.
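The idea that an optimum sits at a vertex of the feasible region can be illustrated by brute force on a tiny problem. The sketch below is not the simplex method: it enumerates every intersection of \(n\) constraint boundaries, keeps the feasible ones, and picks the best objective value. It assumes the feasible region is nonempty and bounded, and it only scales to very small problems. The function name and the example data are hypothetical.

```python
import itertools

import numpy as np


def solve_lp_by_vertices(c, A, b, tol=1e-9):
    """Minimize c^T x subject to Ax <= b and x >= 0 by vertex enumeration.

    Assumes a nonempty, bounded feasible region (illustration only).
    """
    c = np.asarray(c, dtype=float)
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    n = len(c)

    # Write all constraints, including x >= 0, as G x <= h.
    G = np.vstack([A, -np.eye(n)])
    h = np.concatenate([b, np.zeros(n)])

    best_x, best_val = None, np.inf
    for rows in itertools.combinations(range(len(h)), n):
        G_sub, h_sub = G[list(rows)], h[list(rows)]
        if abs(np.linalg.det(G_sub)) < tol:
            continue                          # boundaries do not meet in a point
        x = np.linalg.solve(G_sub, h_sub)     # candidate vertex
        if np.all(G @ x <= h + tol):          # keep only feasible vertices
            val = c @ x
            if val < best_val:
                best_x, best_val = x, val
    return best_x, best_val


# Maximize x + 2y (minimize -x - 2y) with x + y <= 4 and x + 3y <= 6.
c = np.array([-1.0, -2.0])
A = np.array([[1.0, 1.0],
              [1.0, 3.0]])
b = np.array([4.0, 6.0])

x_opt, val_opt = solve_lp_by_vertices(c, A, b)
print(x_opt, val_opt)   # optimum at the vertex (3, 1) with value -5
```

The simplex method reaches the same vertex without visiting all of them, by moving only to neighboring vertices that improve the objective.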

Numerical and applied linear algebra provides efficient algorithms, theoretical support, and practical solutions for optimization problems in engineering and economics, from solving large-scale linear systems to production optimization and market regulation.

Cross-Disciplinary Applications of Linear Algebra

Applications in Machine Learning and Data Science

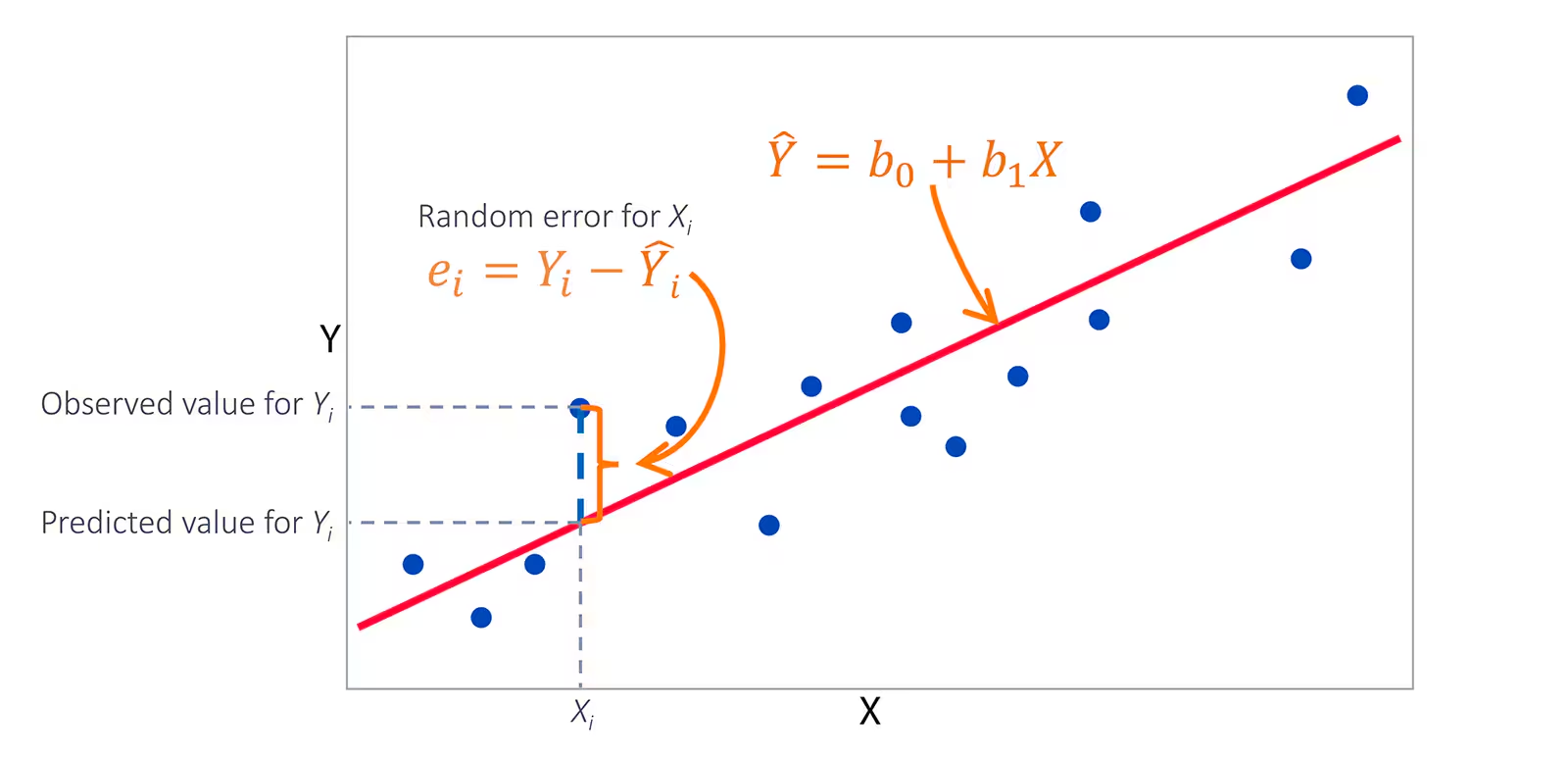

Linear algebra is an indispensable theoretical foundation of machine learning and data science: many machine learning models are built on matrix operations and vector space theory. Fitting a linear regression model by the least squares method, for example, leads to the optimization problem:

\(\min_{w}\|Xw-y\|_2^2,\)

where \(X\) is the matrix of sample features, \(w\) is the unknown weight vector, and \(y\) is the vector of target values. Setting the gradient of the objective to zero gives the normal equations

\(X^TXw=X^Ty.\)

When \(X^TX\) is invertible, the analytical solution can be expressed as

\(w=(X^TX)^{-1}X^Ty.\)

Parameter estimation thus reduces to matrix multiplication and inversion.
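The normal equations can be tried out on synthetic data. The sketch below generates noisy samples from a known line, solves \(X^TXw=X^Ty\) with `np.linalg.solve` (avoiding an explicit inverse), and compares the result with `np.linalg.lstsq`. The data, the seed, and the true weights are all made up for the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 50 samples, a column of ones (intercept) plus one feature.
X = np.column_stack([np.ones(50), rng.normal(size=50)])
w_true = np.array([2.0, -1.5])                  # assumed ground truth
y = X @ w_true + 0.01 * rng.normal(size=50)     # small additive noise

# Normal equations: (X^T X) w = X^T y.
w_normal = np.linalg.solve(X.T @ X, X.T @ y)

# Reference solution from NumPy's least squares routine.
w_lstsq = np.linalg.lstsq(X, y, rcond=None)[0]

print(w_normal)                          # close to [2.0, -1.5]
print(np.allclose(w_normal, w_lstsq))    # True
```

When \(X^TX\) is ill-conditioned, QR- or SVD-based solvers such as `np.linalg.lstsq` are usually preferred over the normal equations.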

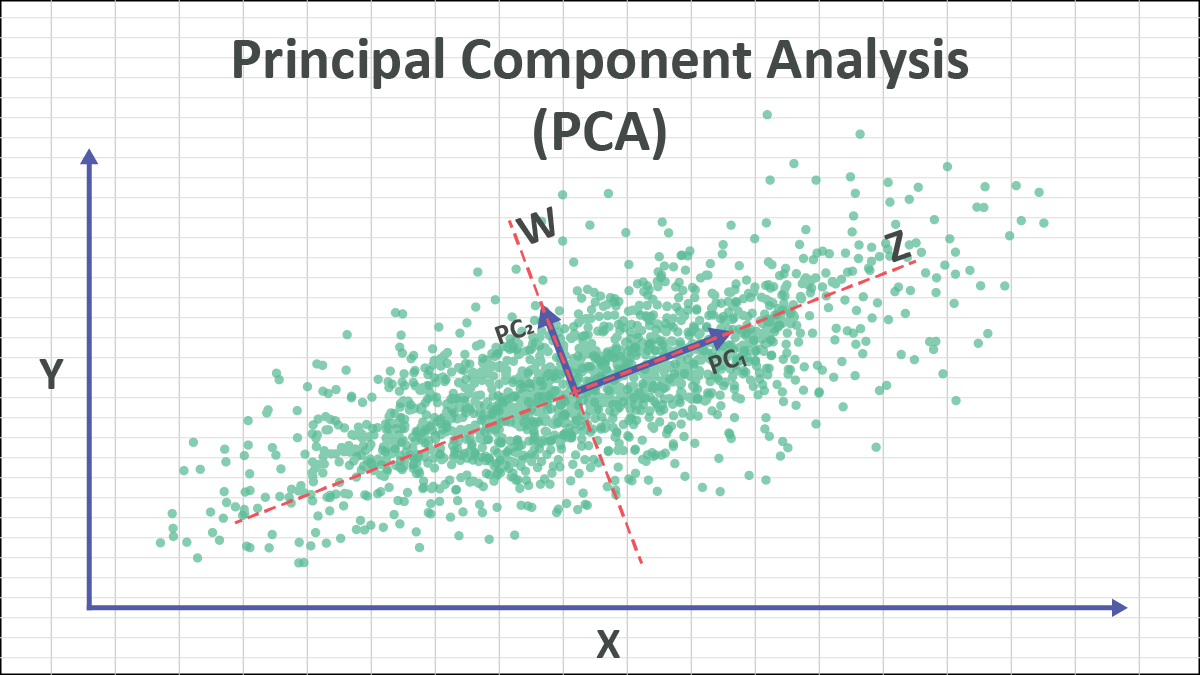

Principal Component Analysis (PCA), used for dimensionality reduction, extracts the most important components by performing an eigenvalue decomposition of the data's covariance matrix. If \(X\) is a data matrix with \(n\) rows (one per sample) whose columns have been centered to zero mean, the covariance matrix is given by

\(C=\frac{1}{n-1}X^TX.\)

By solving the eigenvalue problem for \(C\), one can select the eigenvectors belonging to the \(k\) largest eigenvalues as projection bases, achieving both dimension reduction and data compression. Singular value decomposition (SVD) also plays an integral part in recommendation systems, image processing, and text mining; its form is:

\(A=U\Sigma V^T.\)

Truncating this factorization to the largest singular values gives a compact low-rank model of the data.
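The PCA steps above (center the data, form the covariance matrix, take the leading eigenvectors, project) can be sketched with NumPy as follows. The data set is synthetic, with most of its variance along one direction, and the choice \(k=1\) is illustrative. The last lines check the link to the SVD: the squared singular values of the centered data, divided by \(n-1\), equal the eigenvalues of the covariance matrix.

```python
import numpy as np

rng = np.random.default_rng(1)

# Synthetic data: 200 samples, 3 features, dominated by one direction.
latent = rng.normal(size=(200, 1))
X_raw = latent @ np.array([[2.0, 1.0, 0.5]]) + 0.1 * rng.normal(size=(200, 3))

# Center each column (feature) to zero mean.
X = X_raw - X_raw.mean(axis=0)
n = X.shape[0]

# Covariance matrix C = X^T X / (n - 1).
C = X.T @ X / (n - 1)

# eigh is meant for symmetric matrices and returns ascending eigenvalues.
eigvals, eigvecs = np.linalg.eigh(C)
order = np.argsort(eigvals)[::-1]          # sort in descending order
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

k = 1
W = eigvecs[:, :k]     # projection basis: top-k eigenvectors
Z = X @ W              # reduced representation, shape (200, k)

explained = eigvals[:k].sum() / eigvals.sum()
print(Z.shape, explained)

# Connection to the SVD of the centered data.
s = np.linalg.svd(X, compute_uv=False)
print(np.allclose(s ** 2 / (n - 1), eigvals))   # True
```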

Gradient computations used when training neural networks and other complex models rely heavily on matrix calculus. Forward and backward propagation between layers consist essentially of matrix multiplications and derivative operations. For instance, a single layer of a simple feedforward neural network computes:

\(y=f(Wx+b),\)

where \(f\) is an activation function and \(W\) and \(b\) are the weight matrix and bias vector, respectively. Vectorizing these operations makes better use of hardware acceleration and also simplifies differentiation through multi-layer models.
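A forward pass through one such layer is a single matrix-vector product followed by an elementwise function. The sketch below uses ReLU as the activation, which is an assumption, since the text does not fix a particular \(f\); the weights, bias, and inputs are arbitrary, and the function names are hypothetical.

```python
import numpy as np


def relu(z):
    """Elementwise ReLU activation: max(z, 0)."""
    return np.maximum(z, 0.0)


def dense_forward(W, x, b, f=relu):
    """Compute y = f(W x + b) for one input vector x."""
    return f(W @ x + b)


# Illustrative layer mapping 2 inputs to 3 outputs.
W = np.array([[1.0, -1.0],
              [0.5, 2.0],
              [-1.0, 0.0]])
b = np.array([0.0, -1.0, 0.5])
x = np.array([2.0, 1.0])

y = dense_forward(W, x, b)
print(y)   # [1. 2. 0.]

# Vectorized version: one matrix product handles a whole batch of inputs.
X_batch = np.array([[2.0, 1.0],
                    [0.0, 1.0]])
Y_batch = relu(X_batch @ W.T + b)
print(Y_batch.shape)   # (2, 3)
```

Processing the batch as one matrix product, rather than looping over samples, is the kind of vectorization that lets hardware accelerators work efficiently.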

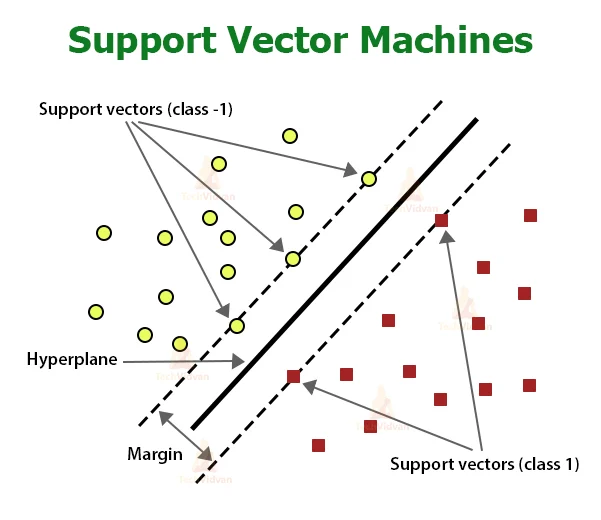

Support vector machines, clustering algorithms (such as K-means), and the convolution operations used in image recognition all rely on linear algebra for both theory and computation. Its tools and methods give data scientists and machine learning practitioners a theoretical and computational framework for handling large volumes of data and designing efficient algorithms.

This article introduced the fundamentals of linear algebra, matrix operations, numerical algorithms, and their cross-disciplinary applications through examples and formulae. Together they show the practical value of the discipline in engineering modeling, economic optimization, and data science.
